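This is the final project I designed for Professor Luo's Robotics Sensing and Control course at National Taiwan University (NTU). Anyone interested is welcome to give it a try.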

921 U8930: Robot Sensing and Control

Final Project: Unmanned Guided Vehicle (UGV)

Objective:

To design a robot that can navigate through an obstacle course and deliver a payload to the designated target location.

Background Information:

Unmanned Guided Vehicles (UGVs) perform routine tasks in industry and also operate in areas that are hazardous to humans. Machine vision can give such vehicles 'sight', which lets them understand their surroundings and makes them more flexible to use. Other sensors can collect additional data about the surroundings, and data from multiple sensors can be “fused” to build a more complete picture of the environment. Such sensors include LIDAR sensors, IMUs (Inertial Measurement Units), ultrasonic sensors, and GPS receivers. Real-world uses of UGVs include military applications, terrain exploration, automated “drivers” or driving assistance, and consumer applications (robot “helpers” or “maids”).

Fig. 1: UGVs in military, automotive, and consumer use

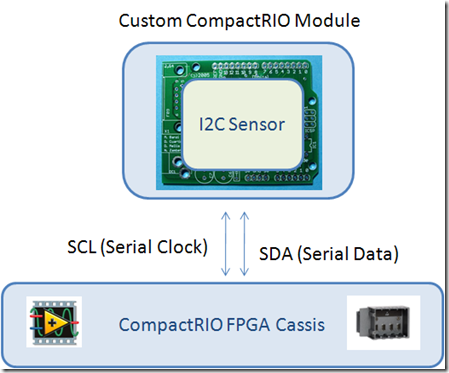

For this project, you will use the NI CompactRIO system to integrate various sensors, vision systems, and motion control. By understanding how NI LabVIEW works as a graphical programming language, you will be able to assemble the critical components of a robotics system.

Rules of the Challenge:

1. Robots start at the “Start Point”. There will be 6 red cones scattered randomly about the area. The field is surrounded by a wall or fence. The waypoints will be marked with brightly colored flags or markers.

2. The robot has to navigate to the first waypoint to “pick up” the payload. For the purposes of this challenge, the robot only has to physically touch the waypoint and wait for 5 seconds.

3. The robot has to navigate to the second waypoint to “deliver” the payload. Again, the robot only has to physically touch the waypoint and wait for 5 seconds.

4. The robot has to navigate back to the “Start Point” and stand by for further instructions.

5. Each group gets 3 runs in the obstacle course. The run with the shortest time counts as your best run. The team with the shortest course time will receive 5 bonus points toward its overall score.
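The course in rules 1 to 4 is a fixed sequence of goals, which maps naturally onto a small state machine (and onto the flowchart recommended under Reminders). The Python sketch below is only an illustration of that logic; the project itself is built in LabVIEW. The state names, the `step` function, and its `reached_target`/`dwell_elapsed` inputs are hypothetical, and how the robot actually detects that it is touching a waypoint is left to your design.

```python
from enum import Enum, auto


class State(Enum):
    GO_TO_WP1 = auto()    # navigate to the first waypoint
    PICKUP = auto()       # touching waypoint 1, waiting
    GO_TO_WP2 = auto()    # navigate to the second waypoint
    DELIVER = auto()      # touching waypoint 2, waiting
    RETURN_HOME = auto()  # navigate back to the Start Point
    STANDBY = auto()      # wait for further instructions


DWELL_S = 5.0  # rules 2 and 3: touch the waypoint and wait 5 seconds


def step(state: State, reached_target: bool, dwell_elapsed: float) -> State:
    """Return the next mission state (hypothetical interface).

    reached_target: True once the robot is touching its current goal.
    dwell_elapsed: seconds spent waiting at the current waypoint.
    """
    if state is State.GO_TO_WP1 and reached_target:
        return State.PICKUP
    if state is State.PICKUP and dwell_elapsed >= DWELL_S:
        return State.GO_TO_WP2
    if state is State.GO_TO_WP2 and reached_target:
        return State.DELIVER
    if state is State.DELIVER and dwell_elapsed >= DWELL_S:
        return State.RETURN_HOME
    if state is State.RETURN_HOME and reached_target:
        return State.STANDBY
    return state  # no transition yet


if __name__ == "__main__":
    # Simulated events for one complete run.
    s = State.GO_TO_WP1
    for reached, waited in [(True, 0.0), (False, 5.0), (True, 0.0),
                            (False, 5.0), (True, 0.0)]:
        s = step(s, reached, waited)
        print(s.name)
```

Running it prints PICKUP, GO_TO_WP2, DELIVER, RETURN_HOME, and STANDBY in turn. Keeping the mission logic separate from the navigation code makes each part easier to test on its own.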

Scoring scheme:

Robot reached Waypoint 1: 10 points

Robot reached Waypoint 2: 10 points

Robot returned to Start Point: 10 points

Bonus Points: 5 points (awarded to the team with the shortest time)

Penalties:

1. If the robot physically touches a cone, 2 points will be deducted.

2. If the robot physically touches the wall or fence, 5 points will be deducted.

3. If the robot needs to be reset, no points will be deducted, but the timer keeps running. You must return your robot to the Start Point to try again.

4. Except for returning the robot to the Start Point, you may not interfere with the robot while it is navigating the obstacle course (e.g., nudging, tilting, or pushing the robot). The robot must be able to finish the course autonomously to receive full points. Interfering with the robot will disqualify the current run, and its course time will not be considered valid.
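The scoring arithmetic can be checked with a short sketch. Since the rules do not spell these details out, it assumes that a team's score comes from the same fastest valid run that sets its time, that a run with interference is excluded entirely, and that scores are not clamped at zero. `Run`, `run_score`, `best_run`, and `total_score` are hypothetical names.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Run:
    time_s: float
    reached_wp1: bool
    reached_wp2: bool
    returned_home: bool
    cone_touches: int = 0
    wall_touches: int = 0
    interfered: bool = False  # nudging/tilting/pushing disqualifies the run


def run_score(run: Run) -> int:
    """Points for one run: 10 per objective, -2 per cone, -5 per wall touch."""
    objectives = int(run.reached_wp1) + int(run.reached_wp2) + int(run.returned_home)
    return 10 * objectives - 2 * run.cone_touches - 5 * run.wall_touches


def best_run(runs: List[Run]) -> Optional[Run]:
    """Shortest-time run among runs without interference (assumption)."""
    valid = [r for r in runs if not r.interfered]
    return min(valid, key=lambda r: r.time_s) if valid else None


def total_score(run: Run, fastest_team: bool) -> int:
    """Add the 5-point bonus if this team had the shortest course time."""
    return run_score(run) + (5 if fastest_team else 0)


if __name__ == "__main__":
    runs = [
        Run(140.0, True, True, False, cone_touches=2),
        Run(95.0, True, True, True, cone_touches=1),
        Run(80.0, True, True, True, interfered=True),  # disqualified
    ]
    best = best_run(runs)
    print(best.time_s, run_score(best), total_score(best, fastest_team=True))
```

In this example the 95-second run is the best valid run; it reaches all three targets but touches one cone, so it scores 30 − 2 = 28, or 33 with the fastest-time bonus.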

Materials/equipment provided to you:

- NI CompactRIO
- NI LabVIEW
- NTU Robot platform with 24V battery
- Hokuyo URG-04LX Scanning Laser Rangefinder
- AXIS 206 camera
- Wireless Router
- 24V DC to 5V DC Converter

Project Guidelines:

There are two main challenges involved in completing the obstacle course.

- How do I make the robot avoid the cones and stay within the field?
- How do I let the robot know where the waypoints are?

Of course, there are many ways to solve these problems, and it is up to you to use your creativity to implement a solution. Here we briefly look at a few examples to help you get started.

Obstacle Avoidance:

Sensors such as ultrasonic and infrared sensors can tell a robot whether an object is within the sensor's range. However, their accuracy is limited, and unless they are used in an array configuration, you will most likely only be able to extract vague information. LIDAR sensors such as the Hokuyo URG-04LX Scanning Laser Rangefinder, on the other hand, can return an accurate “map” of their surroundings within the scan range. With such a sensor it is easy to detect objects accurately, and the robot can use this information to decide whether to drive forward, turn, reverse, etc. LabVIEW has example programs that interface directly with the Hokuyo URG-04LX.
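A scanning rangefinder's “map” is a list of distances taken at evenly spaced angles, and converting each reading to Cartesian coordinates gives a point cloud like the one in Fig. 2. The Python sketch below assumes that the scan covers −120° to +120° (a 240° field of view), that readings are in millimeters, that angles increase counterclockwise with x pointing forward and y to the left, and that readings below 20 mm are invalid. Check these against the URG-04LX documentation and the LabVIEW driver you actually use; `scan_to_points` is a hypothetical helper.

```python
import math
from typing import List, Tuple


def scan_to_points(ranges_mm: List[float], start_deg: float = -120.0,
                   end_deg: float = 120.0,
                   min_valid_mm: float = 20.0) -> List[Tuple[float, float]]:
    """Convert evenly spaced range readings to (x, y) points in meters.

    Assumed convention: x points straight ahead of the sensor, y to the
    left, and angles increase counterclockwise from start_deg to end_deg.
    """
    n = len(ranges_mm)
    if n < 2:
        raise ValueError("need at least two readings")
    step = (end_deg - start_deg) / (n - 1)
    points = []
    for i, r in enumerate(ranges_mm):
        if r < min_valid_mm:  # treat very small/zero readings as invalid
            continue
        theta = math.radians(start_deg + i * step)
        d = r / 1000.0  # millimeters -> meters
        points.append((d * math.cos(theta), d * math.sin(theta)))
    return points


if __name__ == "__main__":
    # Three readings at -120, 0 and +120 degrees; the first is invalid.
    for x, y in scan_to_points([0.0, 1500.0, 800.0]):
        print(f"({x:.3f}, {y:.3f})")
```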

Fig. 2: Hokuyo URG-04LX and resulting “map” of surroundings

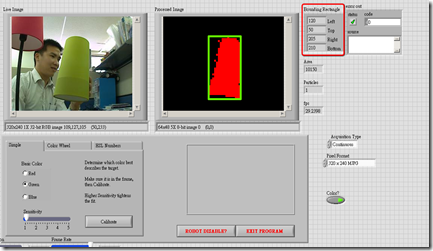
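One simple way to turn a scan into a drive-forward, turn, or reverse decision is to split it into sectors and compare the closest return in each sector with a safety distance. This is a generic reactive sketch, not a prescribed method. The sector boundaries (±30° in front, 30° to 90° on each side), the 500 mm safety distance, and the scan convention (counterclockwise from −120° to +120°, millimeters, positive angles to the left) are assumptions to tune on the real robot; `sector_min` and `decide` are hypothetical names.

```python
import math
from typing import List


def sector_min(ranges_mm: List[float], lo_deg: float, hi_deg: float,
               start_deg: float = -120.0, end_deg: float = 120.0,
               min_valid_mm: float = 20.0) -> float:
    """Closest valid reading whose angle lies in [lo_deg, hi_deg]."""
    n = len(ranges_mm)
    if n < 2:
        raise ValueError("need at least two readings")
    step = (end_deg - start_deg) / (n - 1)
    vals = [r for i, r in enumerate(ranges_mm)
            if lo_deg <= start_deg + i * step <= hi_deg and r >= min_valid_mm]
    # No valid return in the sector: assume it is clear (an assumption;
    # a more cautious design might treat it as blocked instead).
    return min(vals) if vals else math.inf


def decide(ranges_mm: List[float], safe_mm: float = 500.0) -> str:
    """Pick 'forward', 'turn_left', 'turn_right' or 'reverse' from one scan."""
    front = sector_min(ranges_mm, -30.0, 30.0)
    left = sector_min(ranges_mm, 30.0, 90.0)     # positive angles = left
    right = sector_min(ranges_mm, -90.0, -30.0)
    if front > safe_mm:
        return "forward"
    if max(left, right) > safe_mm:
        # Turn toward the side with more clearance.
        return "turn_left" if left >= right else "turn_right"
    return "reverse"
```

The wall or fence shows up in the scan just like a cone, so the same check also helps keep the robot inside the field. A purely reactive rule like this can oscillate between left and right turns in cluttered areas; adding some hysteresis or committing to a turn for a short time is a common remedy.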

Waypoint Detection:

In robotics applications, object detection and tracking are usually done with a vision system. Machine vision is highly dependent on ambient lighting conditions, and many different algorithms can be applied to object tracking. One method is to track by color: if an object's color contrasts strongly with its surroundings, the robot can use a camera to recognize that unique color and then, with some further vision processing, determine the object's relative position. Robots can also match an object by its geometry, or use a combination of color and geometry. Because the waypoint markers will be brightly colored objects, your robot can find them by color, by geometry, or by both. LabVIEW also has example programs that interface directly with the AXIS 206 IP camera.

Fig. 3: AXIS 206 Camera and sample object tracking algorithm screenshot
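Color tracking as described above comes down to thresholding each pixel in a hue/saturation/value (HSV) band, taking the centroid of the matching pixels, and converting the centroid's horizontal position into a bearing the robot can steer toward. The pure-Python sketch below only illustrates that arithmetic; on the actual system you would use LabVIEW's vision tools. The image format (rows of 0 to 255 RGB tuples), the threshold defaults, and the pinhole-camera bearing formula are assumptions. `hfov_deg`, the camera's horizontal field of view, should come from the AXIS 206 datasheet rather than the placeholder value you might use for testing. `find_marker` and `bearing_deg` are hypothetical names.

```python
import colorsys
import math
from typing import List, Optional, Tuple

Pixel = Tuple[int, int, int]  # (r, g, b), each 0-255


def find_marker(image: List[List[Pixel]], hue_lo: float, hue_hi: float,
                min_sat: float = 0.5, min_val: float = 0.3,
                min_pixels: int = 20) -> Optional[Tuple[float, float, int]]:
    """Centroid (cx, cy) and count of pixels inside an HSV band, or None.

    Hue, saturation and value use colorsys's 0-1 scale. A band that wraps
    around hue 0 (red) is not handled; the cones are red anyway, so pick
    a marker hue band that excludes red.
    """
    sum_x = sum_y = count = 0
    for y, row in enumerate(image):
        for x, (r, g, b) in enumerate(row):
            h, s, v = colorsys.rgb_to_hsv(r / 255.0, g / 255.0, b / 255.0)
            if hue_lo <= h <= hue_hi and s >= min_sat and v >= min_val:
                sum_x += x
                sum_y += y
                count += 1
    if count < min_pixels:  # too few pixels: treat as "not seen"
        return None
    return sum_x / count, sum_y / count, count


def bearing_deg(cx: float, width: int, hfov_deg: float) -> float:
    """Horizontal angle to column cx using a pinhole model; positive = left."""
    center = (width - 1) / 2.0
    focal_px = (width / 2.0) / math.tan(math.radians(hfov_deg) / 2.0)
    return math.degrees(math.atan((center - cx) / focal_px))
```

For example, `find_marker(frame, 0.25, 0.42)` would look for green pixels (pure green has hue 1/3 on the `colorsys` scale). A positive bearing means the marker lies left of the image center, and the `min_pixels` threshold helps reject small patches of noise.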

Suggested Project Timeline:

Week 1: Establish connection with CompactRIO and motors.

Week 2: Connect and test Hokuyo LIDAR Sensor.

Week 3: Connect and test AXIS 206 Camera. Test vision algorithm.

Week 4: Fine-tune the navigation algorithm.

Week 5: Final debugging, field trials.

Reminders:

- Always back up your programs!
- Before you start programming, always draw a flowchart of your logic.
- Be careful of short circuits and live wires. Double-check all wiring before powering on!
- When you're not sure what a LabVIEW VI does, use the online help and the Example Finder.
- Start early! Leave plenty of buffer time for experimentation.

Be safe, be smart, and have fun.

John Wu

National Instruments Taiwan

wei-han.wu@ni.com
