Lately there has been a lot of buzz about the Microsoft Kinect accessory, especially in mobile robotics. Imagine, a 3D scanner for not 2000 USD, but 200 USD! Well, if you already happen to use LabVIEW, things just got a little easier.

This post is actually a response to the great work that Ryan Gordon has been doing over at his blog, http://ryangordon.net/. Ryan has already put up a LabVIEW wrapper for the OpenKinect library. If he had not done this, my experimentation would not have been possible. So, kudos to Ryan.

You can get started pretty fast using Ryan’s LabVIEW example, which grabs the RGB image, the 11-bit depth image, and accelerometer data off of the Kinect. I know that other people (e.g. at MIT) have gone on to use the Kinect for 3D scene reconstruction, and I was just curious whether LabVIEW could do the same. So, after some Google searching, I found a LabVIEW point cloud example and combined that with Ryan’s example code. Here’s how to get started:

1. Get your Kinect connection up and running first. Ryan has included inf files on his site, and I have included them in my download link as well. Be sure to install the Microsoft Visual C++ 2010 Redistributable Package. Check http://openkinect.org/wiki/Getting_Started for more information.

2. Run Ryan’s example.vi first to get a feel of how the program works. It’s the typical, but very handy, open –> R/W –> close paradigm.

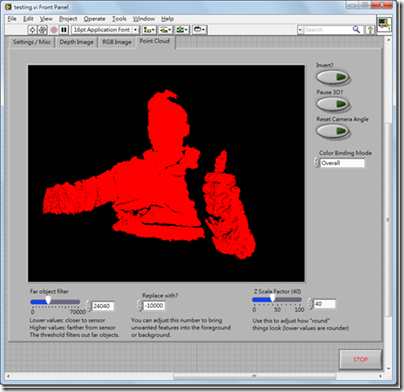

3. Now open up Kinect Point Cloud.vi. The tabs on the top still have your depth image and RGB image, but now I’ve added a point cloud tab.

4. There are some options that you can adjust while in the point cloud tab. There is a filter that lets you remove far objects; you can adjust its threshold on the lower left. “Invert” turns the 3D view inside out, “pause 3D” holds the current 3D view, and in case you lose the mesh while scrolling around in the 3D view, use the “reset camera angle” button. BTW, use your left mouse button to rotate the 3D view, hold down shift to zoom in/out, and hold down ctrl to pan.

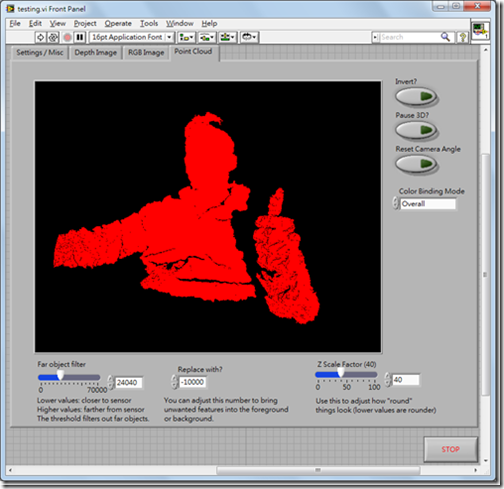

5. If you choose color binding mode to be “per vertex”, something interesting happens:

You can map the RGB values to the 3D mesh! Obviously there is some calibration needed to remove the “shadow” of the depth image, but that’s something to fiddle with in the future.

6. For those of you who care, I’ve modified Ryan’s “get RGB image” VI and “get depth image” VI so that they output raw data as well. I just wanted to mention this in case your subVIs don’t match up.

The idea behind displaying the 3D mesh is pretty simple. It’s a lot like the pin art toy you see in Walmart:

The Kinect already gives you the z-values for the 640x480 image area; the LabVIEW program just plots the mesh out, point by point. I had wanted to use the 3D Surface or 3D Mesh ActiveX controls in LabVIEW, but they were just too slow for real-time updates. Here is my code in LabVIEW 8.6. I’ve bundled Ryan’s files with mine so you don’t have to download from two different places. Enjoy!

Download: LabVIEW Kinect point cloud demo
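For readers who don’t have LabVIEW at hand, the pin art idea can be sketched in a few lines of Python. This is only an illustration, not a port of the VI: the function name `depth_to_points`, the tiny 2x3 grid standing in for the 640x480 frame, and the assumption that larger raw depth values mean farther objects are all mine.

```python
from typing import List, Tuple

Point = Tuple[int, int, int]


def depth_to_points(depth: List[List[int]],
                    far_threshold: int,
                    invert: bool = False) -> List[Point]:
    """Turn a 2D grid of raw depth readings into (x, y, z) points.

    Assumption: a larger raw reading means a farther object, so readings
    above far_threshold are dropped (a stand-in for the far-object filter).
    """
    points: List[Point] = []
    for y, row in enumerate(depth):
        for x, z in enumerate(row):
            if z > far_threshold:
                continue  # too far away: leave this "pin" out of the cloud
            # "Invert" here simply flips the sign of z (an assumption)
            points.append((x, y, -z if invert else z))
    return points


# Tiny 2x3 stand-in for a 640x480 depth frame (made-up values)
depth = [[500, 900, 2047],
         [480, 510, 1200]]

cloud = depth_to_points(depth, far_threshold=1000)
print(cloud)  # [(0, 0, 500), (1, 0, 900), (0, 1, 480), (1, 1, 510)]
```

Raising `far_threshold` to the maximum 11-bit value (2047) keeps every point, background included.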

Things to work on:

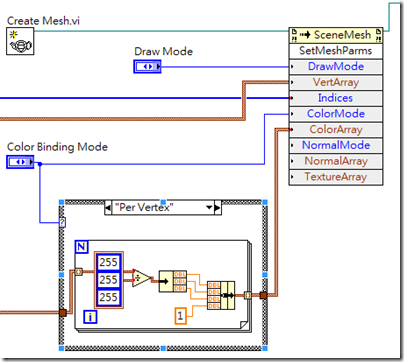

I am a bit obsessive about the performance of my LabVIEW code. For those of you who noticed, the 3D display will update slower if you choose “per vertex” for color binding. This is because I have to comb through each of the 307,200 RGB values, each already in a 3-element cluster, and turn it into a 4-element RGBA cluster so that the 3D SetMeshParms node can take the input with an alpha channel. If any of you know how to do this in a more efficient way, please let me know! This really irks me, knowing that I’m slowing down just to add a constant to an existing cluster.
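To make that step concrete, here is a rough Python sketch of the same conversion. It is not the LabVIEW code; the names `add_alpha` and `add_alpha_flat` are hypothetical. The first function mirrors the element-by-element approach described above. The second shows one alternative way to think about it: preallocate the output already filled with the alpha constant, then copy each color channel across in one pass. Whether an equivalent restructuring would actually be faster in LabVIEW is untested.

```python
from typing import List, Tuple

RGB = Tuple[float, float, float]
RGBA = Tuple[float, float, float, float]


def add_alpha(pixels: List[RGB], alpha: float = 1.0) -> List[RGBA]:
    """Element by element: rebuild every 3-element color as a 4-element one."""
    return [(r, g, b, alpha) for (r, g, b) in pixels]


def add_alpha_flat(flat_rgb: List[float], alpha: float = 1.0) -> List[float]:
    """Same result on a flat, interleaved [r, g, b, r, g, b, ...] list.

    The output is preallocated with alpha everywhere, then each color
    channel is copied across with one strided slice assignment.
    """
    if len(flat_rgb) % 3 != 0:
        raise ValueError("flat_rgb length must be a multiple of 3")
    n = len(flat_rgb) // 3
    out = [alpha] * (n * 4)
    out[0::4] = flat_rgb[0::3]  # red channel
    out[1::4] = flat_rgb[1::3]  # green channel
    out[2::4] = flat_rgb[2::3]  # blue channel
    return out


print(add_alpha([(1.0, 0.0, 0.0)]))
print(add_alpha_flat([0.1, 0.2, 0.3, 0.4, 0.5, 0.6]))
```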

I have also seen other 3D maps where depth is also indicated by a color gradient, like here. I guess it wouldn’t be hard to modify my code; it’s just some interpolation from the depth value to a color. But that’s a little tedious to code, and I prefer spending more of my time playing with 3D models of myself! (Uh, that sounded weird. But you know what I mean.)
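For what it’s worth, the interpolation could look something like the Python sketch below. It is a guess at one simple scheme, not what those other 3D maps actually use: the function name `depth_to_color` and the red-to-blue ramp are assumptions, and the default range of 0 to 2047 just reflects the 11-bit depth image.

```python
from typing import Tuple


def depth_to_color(z: int, z_min: int = 0, z_max: int = 2047) -> Tuple[float, float, float]:
    """Linearly interpolate from red (nearest) to blue (farthest).

    Returns an (r, g, b) tuple with each channel in the range 0.0 to 1.0.
    Depth values outside [z_min, z_max] are clamped to the nearest end.
    """
    if z_max <= z_min:
        raise ValueError("z_max must be greater than z_min")
    clamped = min(max(z, z_min), z_max)
    t = (clamped - z_min) / (z_max - z_min)  # 0.0 at z_min, 1.0 at z_max
    return (1.0 - t, 0.0, t)


print(depth_to_color(0))     # (1.0, 0.0, 0.0), pure red
print(depth_to_color(2047))  # (0.0, 0.0, 1.0), pure blue
```

Applying this to every depth value would give a per-vertex color array driven by depth instead of by the RGB camera.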

A little about me:

My name is John Wu. I worked at National Instruments Taiwan for about six years, and now I’m at a LabVIEW consulting company called Riobotics, where we develop robots with LabVIEW not only for fun, but also for a living! Please leave your comments and feedback; I’d love to hear from you.

-John

Cool~~

John - you're my hero! This is AWESOME. I can't wait to see what you are able to do next.

good job!

Hi, this is a really good job. Actually, I wrote the same program as you did a couple of weeks ago, but it ran slowly, so I gave up and didn't work on it anymore. Now I see in your video that it works perfectly fine, so I downloaded your version, but it still runs slowly. Do you know why?

Andres,

Couple of thoughts:

1. Slow computer?

2. Use highlight execution to debug, isolate the bottleneck.

If you're familiar with LabVIEW, it's easy to figure out. Or you can ask a friend to help you.

Hi John, here is my video using LabVIEW and the Kinect with an algorithm that I developed called Occupancy Grid. Enjoy.

http://www.youtube.com/watch?v=-KXQ750qAWA

Looks good Andres, although I'm a little confused as to what you're doing in the demo. What are you trying to achieve, and how are you going about achieving it? If you had a little more information maybe I could put it up on a blog post for you.

P.S. I noticed Robert Bishop in your credits, looks like you're a LabVIEW fan as well (if you aren't yet, you soon will be.) Cheers!

Hello John Wu,

I am a student from the Netherlands and at the moment I am working on a project. It is my intention to locate an object with the Kinect using LabVIEW. I have successfully installed the drivers from OpenKinect and I have used the example file from Ryan Gordon as well as your point cloud files. I must say that you both have delivered some fine programming (I myself am not that good with LabVIEW but I understand the basics). Do you think it is possible for me to make a VI where the point cloud image that is produced looks like the images from the link?

I want a point cloud from the whole range of the Kinect.

http://borglabs.com/blog/create-point-clouds-from-kinect

I am eagerly waiting for your reply and again thank you for your work.

Kaya Grussendorf

Hi Kaya,

If I understand you correctly, I think my program already does what you're asking for. If you click on my YouTube video at the top of the post and navigate to about 1:17, you'll find that I've adjusted the "far object filter" slide on the bottom left of my VI to the maximum value, so this keeps all the values from the range of the Kinect, including those in the background. The only reason I made a threshold to eliminate the background was to make the presentation neater, that's all.

If you scan to about 2:10sec in the video, you'll see that the point cloud data is actually quite dense, so this is very much like the picture you're referring to from borglabs. I hope this helps!

-John

John, Robert Bishop is my advisor on the project and I'm working with him. I'm trying to build a map in order to use it in robotics.

Hi Andres,

I understand now, looks like you are doing some map reconstruction with the Kinect. Care to share your code with us? I am sure other people would find this information beneficial as well.

I am curious Andres, how are you rotating your kinect sensor? Are you using another rotary platform for the rotation?

Hi, yes, I would like to share the code with you and the whole community. Like I said, the code still has some bugs. If you take a look in the NI forum, you can see the video there. For now, I'm going to finish the inverse model for the Kinect in order to obtain a more accurate map. I'm using the theory, and hopefully I'm going to create a block for the occupancy grid map that I made for LabVIEW.

The link is below.

http://decibel.ni.com/content/blogs/MechRobotics/2011/03/28/labview-kinect-occupancy-map

Hi John,

Thanks for your reply. You were right, I must have missed that part of the video. I have another question: what are your computer specs? Both my laptop (2.0 GHz duo) and the computer at my university (Pentium 4 2.6 GHz) are having trouble showing the point cloud image.

Kaya

Hi Kaya:

Try the "Reset Camera Angle" button on the top-right corner. Sometimes the data is there, it's just not in view. If that doesn't work, try using your mouse to scroll in the 3D window until you see something; it might take some luck. I would also check your grayscale depth image to verify that data is actually being acquired. Hope this helps.

Hi John,

What I meant to say was that the point cloud images are being displayed, but it seems to be going very slow. The RGB and depth images are being displayed well like in your YouTube video, but the point cloud images will refresh about every 10 seconds, so that's much slower than in the video. That’s why I asked what your pc specs are, because I think the pc I am using is too slow.

Kaya

Hello Kaya,

Right now I am using an i5-M540 processor with 4GB RAM, Win7 64-bit. The graphics card on my laptop is an NVidia 3100M. I would guess your bottleneck is the graphics card, since as far as I know the LabVIEW 3D display goes through DirectX for rendering. Try tweaking your graphics settings to see if you can get some HW acceleration. Are you using XP or Win7?

Hi John, I am using Win 7 and the graphics card is an Intel integrated card (the 4500), so you might be right about that. Is it possible to use hardware acceleration in LabVIEW?

Kaya

Hi John, there is one other issue I get when I choose Per Vertex as the color binding mode. I get this error: 1496 occurred at invoke node in kinect pointcloud.vi

Possible reason(s):

LabVIEW: There must be enough data in the array for the given color or normal binding mode.

Do you know the reason I get that error?

Kaya

Hi Kaya,

As far as HW acceleration goes, I don't think you have to specify any parameters in LabVIEW. Just make sure Windows has the drivers to your graphics card, and Windows will take care of the rest.

As for your error 1496, I don't see the same error happening on my system. But if you're proficient in LabVIEW, you may want to check the data going into the "ColorArray" input of the Scenemesh invoke node. Experiment with different values to see if you still get the error.

For instance, when color binding mode "overall" is selected, it applies the color "red" to all of the points (R:1, G:0, B:0, Alpha:0). "Per Vertex" just means we want to take the color of each pixel in the RGB image and apply it to the corresponding point in the point cloud. I hope this helps.
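One possible reading of the error message, sketched in Python rather than LabVIEW: each binding mode expects a certain number of colors, and the call fails when the color array is shorter than that. The function `check_color_array` and its counting rule are assumptions made for illustration, not LabVIEW's actual check.

```python
from typing import List, Tuple

RGBA = Tuple[float, float, float, float]


def check_color_array(mode: str, colors: List[RGBA], vertex_count: int) -> None:
    """Raise ValueError if colors is too short for the binding mode.

    Assumed rule: "overall" needs one color for the whole mesh,
    "per vertex" needs one color for every vertex.
    """
    required = {"overall": 1, "per vertex": vertex_count}
    if mode not in required:
        raise ValueError(f"unknown color binding mode: {mode!r}")
    if len(colors) < required[mode]:
        raise ValueError(
            f"{mode!r} needs {required[mode]} colors, got {len(colors)}"
        )


red = (1.0, 0.0, 0.0, 0.0)
vertex_count = 640 * 480  # 307,200 points in one depth frame

check_color_array("overall", [red], vertex_count)                      # passes
check_color_array("per vertex", [red] * vertex_count, vertex_count)    # passes
```

Under that reading, a "per vertex" color array with fewer entries than the mesh has vertices would be one way to trigger the error.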

Hi John, what version of LabVIEW are you using? And are you using IMAQ?

For this VI I am using LabVIEW 8.6. If you're asking whether I'm using NI-IMAQ, the answer is yes. If you haven't got it, you can get it off of ni.com for free, or it's included in NI's Vision Development Module, if you have that.

Hi John, take a look at the new video that I made... I hope you like it.

http://www.youtube.com/watch?v=73vA_cTxGiU&feature=iv&annotation_id=annotation_926643

Hi John!

My name is Nidal. I'm a French student in metrology, and for my end-of-year project I'm trying to impress my teachers with my "low-budget" 3D scanner.

Thanks to an article I read days ago, I knew it was possible to scan an object using only the Kinect. Thanks also to your article above, which proves to me that it is quite possible and gives awesome results.

So I'm hoping that you still respond to comments, and I'll ask you if I have some problems.

Thank you for sharing!

Sincerely,

Nidal

Hi john

My name is jeon kyung min.

I'm from KPU in korea.

I would like to know how you have connected to the computer with Kinect.

also I would like to know about the linkage with LabVIEW.

Please reply by e-mail.(jkm123@naver.com)